Archivematica Alpha MVP

Goals and Objectives

Goals

- Describe the workflows that will be supported in the Minimum Viable Product (MVP) preservation platform in a form that can be shared with the HEI pilots.

- Show the Higher Education Institution (HEI) pilots some of the ways in which digital preservation can fit into the wider research data management lifecycle of data upload, description, publication, access and re-use.

Objectives

- Describe and agree with Jisc two example research data digital preservation workflows that will be supported by the RDSS preservation platform MVP (Alpha stage of the project).

- Provide early visibility and transparency to the HEI pilots of what Arkivum/Artefactual/Jisc are aiming for in Lot 5 (digital preservation platform).

- Describe the workflows in a form that is easy to understand by non-technical people at the HEI pilots.

- Define workflows that will enable HEIs to explore the range of functionality provided by the Archivematica tool so they will then better understand what is possible and what further features need to be added in the beta.

- Provide a concrete foundation for Arkivum/Artefactual/Jisc to define and agree the specific integration needed between the preservation platform and the rest of the RDSS, e.g. events, data model, data transfers etc.

- Identify alternative or enhanced research data preservation workflows that could be supported in the beta phase of the project.

Terminology

The following terminology is used in the workflow descriptions.

End-user roles:

- Researcher: Someone who generates research data as part of their research and needs to deposit/publish this research data using the Jisc RDSS. The researcher might be someone doing the research on a day-to-day basis or it might be a Principal Investigator (PI) who is responsible for the research.

- Research Manager: Someone who is responsible at an Institution for the long-term management, quality, compliance, accessibility and usability of research data generated by Researchers at their institution. The Research Manager could be a member of a specific Faculty or Dept or they could be part of a centralised team, e.g. Library, Governance or Research Office.

- Research Data User: Someone who wants to use research data that is held by the RDSS. For example, a Research Data User might want to use data to do further research of their own, to validate the original research as done by the Researcher, or as part of peer review of a publication describing some research that is based on the data.

The terms 'Researcher', 'Research Manager' and 'Research Data User' are found in the HEI pilot user stories. Not all HEIs use the same terminology; for example, other terms used by the HEIs include 'Data Manager' and 'Digital Curator'.

Entities:

- RDSS Messaging System: The part of the RDSS that is used to send messages to/from the various components of the RDSS platform such as Repository, Preservation System and Dashboard.

- Research Data Repository: A place where research dataset records are created, stored and made accessible.

- Research Dataset: One or more Data Files that contain research data that is related in some way, e.g. a set of images from an equipment run on a given sample, a set of oral history interviews, a set of observations from sensors on a glacier.

- Data File: A bitstream that constitutes a single file within a research dataset. The bitstream is in a file format (zip, pdf, csv, jpeg etc.).

- Preservation System: A place where Research Datasets can be processed so that they continue to be accessible and usable over time. This includes processing to:

(a) help understand the technical aspects of the dataset, e.g. the file formats being used,

(b) convert files into more open or longer-lived formats, e.g. normalisation, and

(c) package the files and metadata in the dataset into containers that can be used to ensure the long-term authenticity and integrity of the dataset, e.g. Archival Information Packages.

Note that many of the terms above have yet to be defined in the canonical data model and are therefore working definitions in Lot 5, pending a project-wide glossary.
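As a minimal sketch of how the working definitions of Research Dataset and Data File relate to each other, the two entities can be modelled as plain Python data classes. All class and field names here are hypothetical illustrations. They are not taken from the RDSS canonical data model, which does not yet define these terms.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DataFile:
    """A single bitstream within a Research Dataset (hypothetical fields)."""
    path: str
    file_format: Optional[str] = None  # e.g. "pdf" or "csv"; None until identified


@dataclass
class ResearchDataset:
    """One or more related Data Files (hypothetical fields)."""
    title: str
    files: List[DataFile] = field(default_factory=list)

    def unidentified_files(self) -> List[DataFile]:
        """Return the files whose format is not yet known."""
        return [f for f in self.files if f.file_format is None]


# Made-up example: one file with a known format, one without.
dataset = ResearchDataset(
    title="Glacier sensor observations",
    files=[DataFile("readings.csv", "csv"), DataFile("run01.dat")],
)
print([f.path for f in dataset.unidentified_files()])
```

Files with no identified format are the kind of thing a Preservation System would help a Research Manager to understand.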

Preamble and Context

Our Assumptions and Approach to Preservation of Research Data

When considering workflows for digital preservation of research data, we recognise that this may not be a top priority for Researchers. Instead, Researchers typically want to get on with doing new research, publishing their results, collaborating with other researchers, getting citations and kudos, submitting successful grant applications etc. In an ideal world, Researchers would be very concerned about the long-term usability of their research data and would actively want to do digital preservation. This is a very real part of making sure data is Findable, Accessible, Interoperable and Reusable (FAIR) [2] and of following good practice in many disciplines, e.g. Good Research Practice (GRP) [1] for medical research. It is also necessary to meet funding body requirements such as those stipulated by the EPSRC [3], which in turn are based on the UK Research Councils (RCUK) principles [4]. However, in many cases there is still work to be done to educate and incentivise Researchers to be more involved in the longevity of their research data. Therefore, in many institutions Researchers expect that their host institution will take on the responsibility and activity of digital preservation, especially where digital preservation of research data is complex and requires specialist skills and tools.

As a result, our starting point is a pragmatic one that reflects the real world, where the typical workflow in many institutions is for the Researcher to be concerned with initial deposit and publication of Research Data and for the Institution's Research Manager to be concerned with the appraisal, preservation and long-term accessibility and usability of the Research Data.

## The Minimum Viable Product Approach

For the Alpha phase of the project, we are aiming to enable two example workflows that:

- Are achievable by June 2017

- Are a reasonable match to the way that institutions are likely to want to approach digital preservation

- Allow the HEIs to better understand and explore the options so that we can get good feedback on what needs to change or be added for the beta stage of the project.

### Automated preservation workflow:

In some cases, the Institution will lack resources (staff, skills, budget, time) to do a comprehensive job of preserving all their research datasets and will instead want a low-cost, fully automated, 'black box' approach to digital preservation of at least some of their data. In a sense, they want a 'preservation sausage machine' whereby research data is fed in at one end and out of the other comes 'preservation packages' containing the research data in a form that is better described and structured for long-term usability.

### Interactive preservation workflow:

In other cases, the Institution will want to work closely with both the Research Data and the Researcher as part of an iterative process of quality control and digital preservation with the end result that the Research Data is better documented from a technical perspective, is transformed into more open formats, is validated as complete and correct, and is signed-off as being fit for publication. This is a more interactive and resource intensive process than 'automated' preservation, but can yield better results and may be more appropriate for specific types of research or institution.

The most appropriate workflow to use will depend on many factors, e.g. the experience an institution has with digital preservation, the resources at its disposal, the research discipline or type of data involved, the requirements of the research funder, the institution's policy and so on. For example, the interactive preservation workflow will require some experience with digital preservation, potentially including how to use some of Archivematica's preservation planning features and how to configure its preservation pipeline. On the other hand, our aim for the automated preservation workflow is to preconfigure the settings so preservation can be done 'out of the box' to as great an extent as possible.

Some institutions may want to use both workflows, e.g. bulk process some of their research data using the 'sausage machine' approach whilst using the 'interactive workflow' for specific high value or problematic datasets.

### Digital Preservation Capabilities:

The workflows described above focus on the critical features and integrations required for preservation within a research data shared service (RDSS). There are many standard ('out of the box') capabilities for digital preservation that are provided by Archivematica but not described here, and these may be valuable for different institutions. More information on Archivematica's capabilities is provided in the Digital Preservation Capabilities section. Some of these features may need further work and integration with the RDSS for their full utility to be realised. This is explored in the Beyond the MVP section. With those caveats in mind, we'd still very much encourage pilot participants to explore these capabilities to help us better understand what work will be required to move to a Beta phase of the service and beyond.

[1] MRC GRP: https://www.mrc.ac.uk/publications/browse/good-research-practice-principles-and-guidelines/

[2] FAIR principles: http://www.nature.com/articles/sdata201618

[3] EPSRC Expectations: https://www.epsrc.ac.uk/about/standards/researchdata/expectations/

[4] RCUK guidance on data management: http://www.rcuk.ac.uk/documents/documents/rcukcommonprinciplesondatapolicy-pdf/

Digital Preservation Scenario

A Researcher wants to publish a paper about their research.

The research is underpinned by a Research Dataset.

The Researcher wants to use the RDSS to publish the Research Dataset.

The Research Dataset needs to be made openly accessible and given a DOI.

The Research Dataset needs to be referenced in the paper using the DOI.

The paper will be published in a journal.

A Research Manager at the Researcher's institution is tasked with digital preservation of all published Research Datasets from their institution.

The Research Manager wants to use the RDSS to preserve the dataset.

A Research Data User subsequently wants to access the Dataset at a later date.

The Research Data User follows the DOI for the Research Dataset which resolves to the RDSS and they then request access to and download the data.

The scenario above has three main stages:

- Upload and publication of Research Data by the Researcher

- Digital preservation by the Research Manager

- Discovery and download of Research Data by a Research Data User

These three stages are not necessarily independent of each other, nor do they have to occur in sequence. For example, the 'interactive workflow' supported by the MVP allows the Research Manager to interact with the Researcher during the data deposit and publication process and to use the preservation system to help understand the data and communicate with the Researcher about how it could be improved in a way that helps with long-term usability.

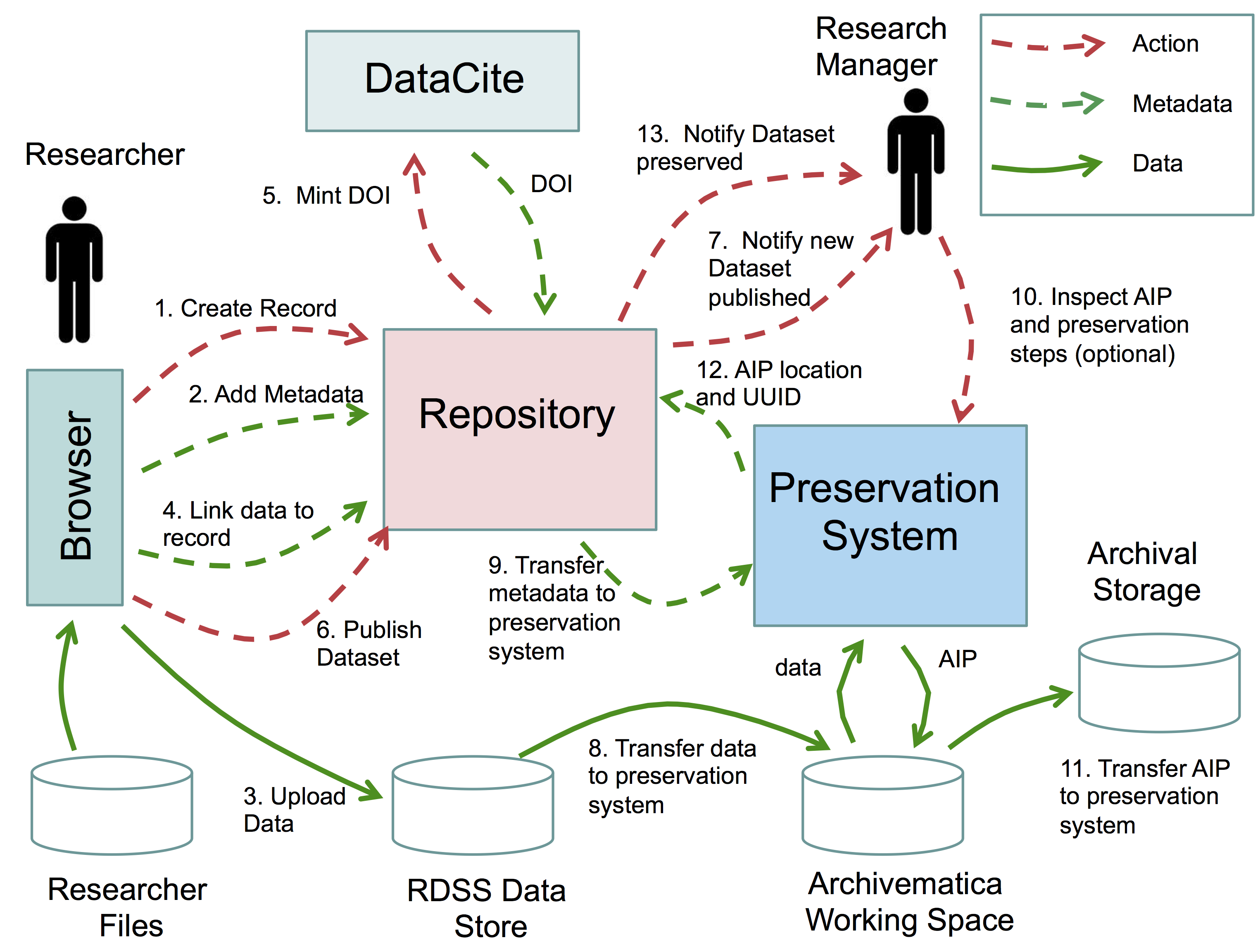

Automated Preservation Workflow

The conceptual workflow below shows how digital preservation can be used in an automated way as part of the RDSS Alpha. Whilst the diagram and description show a Repository and Preservation System, in practice other components of the RDSS will come into play, for example databases and messaging systems that mediate the interactions between parts of the RDSS and other User Interfaces that provide Researchers and Research Managers with ways in which to interact with the RDSS. These extra components and user interfaces are omitted from the diagram for the sake of clarity - our objective is to explain the overall workflow at a conceptual level rather than dive into too many details.

Dataset upload and publication stage

The Researcher creates a Research Dataset Record in the RDSS Research Data Repository for their Research Dataset. [1]

The Researcher adds basic metadata (title, subject, date, author etc.) to the record. [2]

The Researcher uploads their Research Dataset to the RDSS. [3]

The Researcher links their uploaded Research Dataset to the Research Data Record in the Repository. [4]

The Researcher reserves a DOI for their Research Dataset, e.g. using DataCite. [5]

The Researcher includes the DOI for their Research Dataset in their paper.

The Researcher uses the Repository to 'publish' their Research Dataset, i.e. make it publicly accessible over the Internet. [6]

The Researcher publishes the paper in a Journal.

The Journal publishing the paper gives the paper a DOI.

The Researcher updates the Research Dataset Record to add the DOI of the paper as extra metadata.

The Researcher is happy now that their data and publication are online and can be seen by the community.

Dataset preservation stage

The Research Manager is notified that a new Research Dataset has been published by the Researcher. [7]

The Research Manager reviews the Research Data Record and decides that the Research Dataset should go through a preservation process.

The Research Manager requests that the Research Dataset is sent to the Preservation System (Archivematica) for preservation.

The Research Dataset location is passed to Archivematica.

The metadata in the Research Data Record is also passed to Archivematica, including the DOI of the Research Dataset and the DOI for the paper. [8]

The Research Dataset is copied to Archivematica's working storage. [9]

The Research Dataset and metadata go through the Archivematica preservation workflow and an Archival Information Package (AIP) is generated. More details of the workflow supported inside Archivematica can be found in the Digital Preservation Capabilities section.

The Research Manager can, if they wish, use Archivematica to look at the details of how the AIP was generated and its contents (optional). [10]

The AIP is given a Universally Unique Identifier (UUID).

The AIP is transferred by Archivematica to long-term archival storage. [11]

The RDSS is informed by Archivematica that the AIP is safely archived and is given the AIP UUID/location and a reference to say which Research Dataset the AIP contains. [12]

The RDSS updates the Research Data Record in the Repository with the AIP location and the AIP UUID.

The Research Manager is notified that the Research Dataset has been through the preservation process and is safely in archival storage. [13]

The Research Manager is happy knowing that the dataset is preserved.

Notes:

Some institutions may want the preservation process to be automated to the extent that all datasets pass through Archivematica without any prior review by the Research Manager. We'll be investigating whether this will be possible in the MVP or whether this is something for the beta stage.

The workflow above describes a scenario where everything works correctly and there are no errors, exceptions or need for user intervention. In reality, digital preservation isn't a perfect world and therefore the MVP will also include an error handling/reporting workflow that we haven't yet documented on these pages.

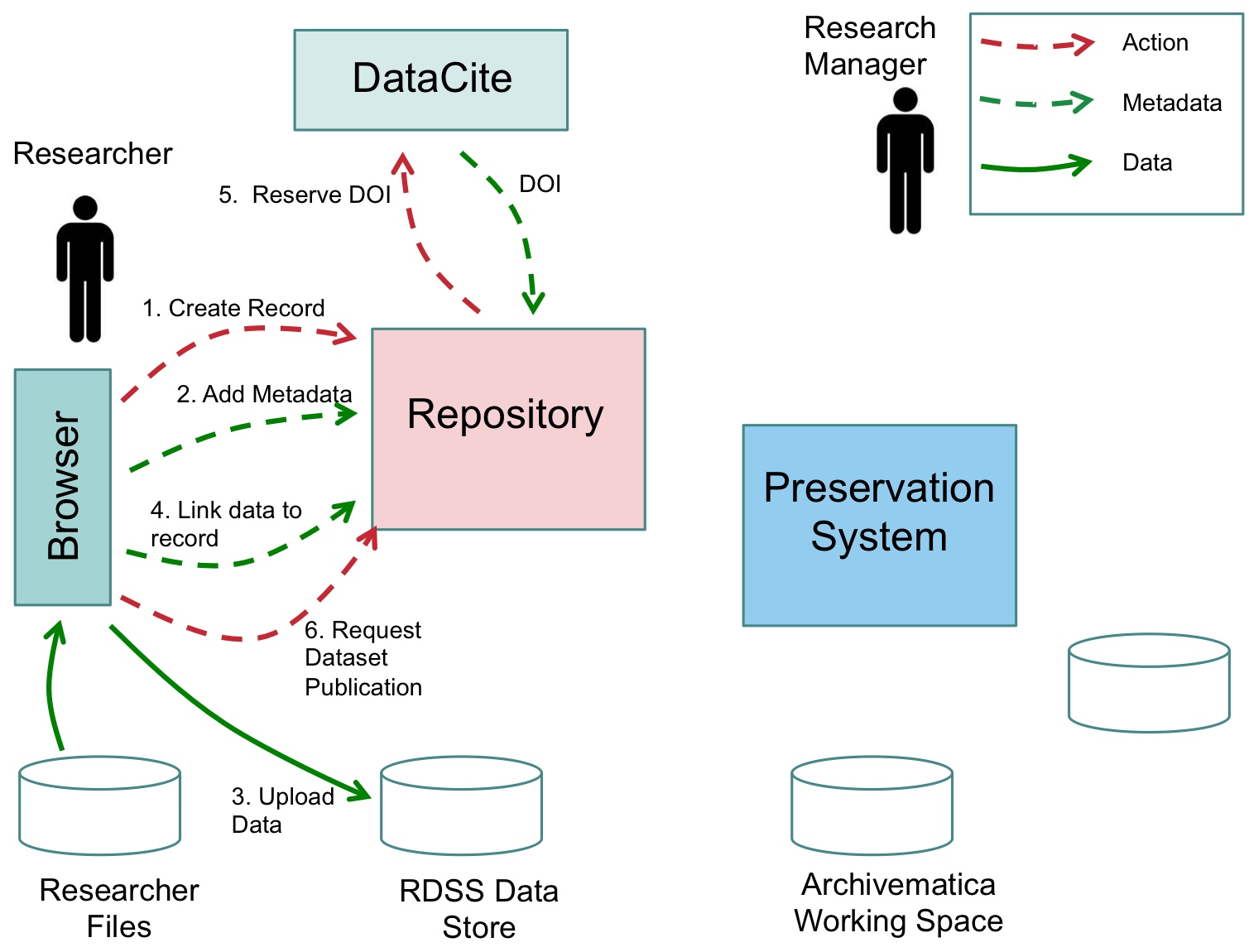

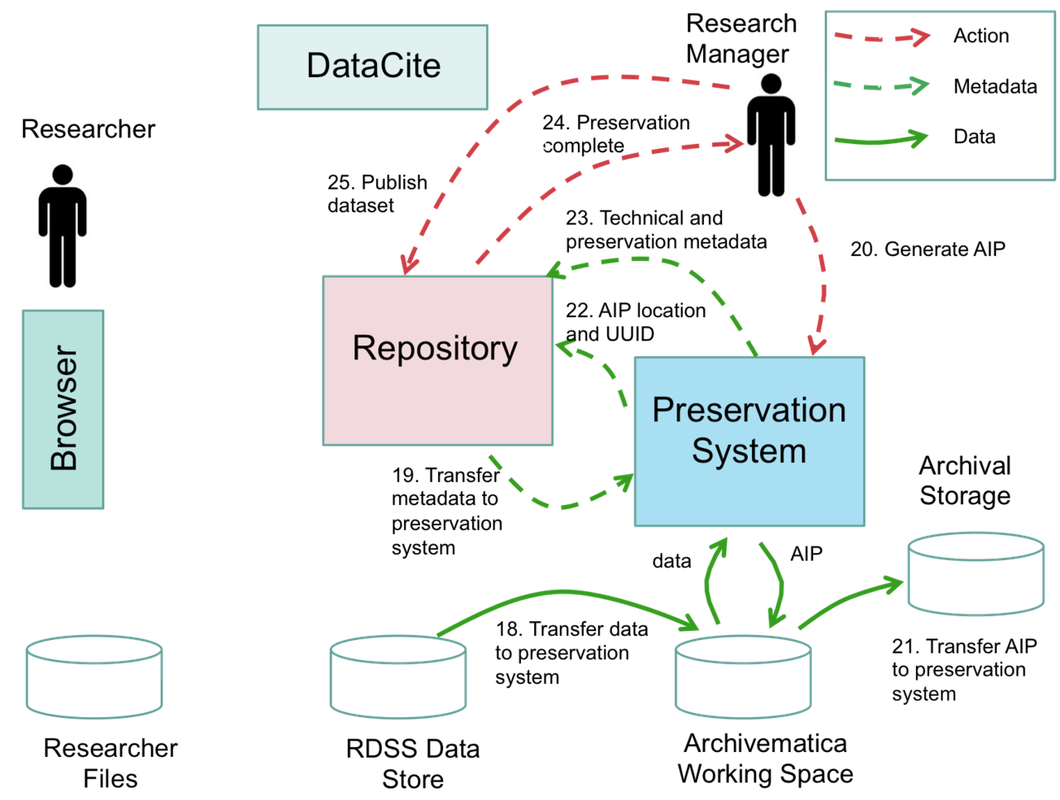
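The hand-over between the RDSS and the Preservation System in the automated workflow can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the RDSS message specification or the Archivematica API. The function name, the message fields, the storage locations and the DOIs are all made up for the example, and the actual preservation processing is replaced by a stub.

```python
import uuid


def run_automated_preservation(request: dict) -> dict:
    """Simulate the preservation stage for one dataset (illustration only).

    `request` stands in for the message the RDSS sends to the Preservation
    System: the dataset location plus the metadata from the repository record.
    """
    # Stub for the real processing: a real AIP would package the files and
    # metadata. Here we only assign the identifiers the workflow mentions.
    aip_uuid = str(uuid.uuid4())                 # the AIP is given a UUID
    aip_location = f"archive://aips/{aip_uuid}"  # hypothetical storage location

    # Reply to the RDSS: which dataset was archived, and where.
    return {
        "dataset_doi": request["metadata"]["dataset_doi"],
        "aip_uuid": aip_uuid,
        "aip_location": aip_location,
        "status": "archived",
    }


request = {
    "dataset_location": "s3://rdss-uploads/example-dataset/",  # hypothetical
    "metadata": {
        "title": "Example dataset",
        "dataset_doi": "10.1234/example-dataset",  # made-up DOI
        "paper_doi": "10.1234/example-paper",      # made-up DOI
    },
}
reply = run_automated_preservation(request)

# The RDSS would then update the repository record with the AIP details.
record = dict(request["metadata"],
              aip_uuid=reply["aip_uuid"],
              aip_location=reply["aip_location"])
print(record["aip_uuid"], record["aip_location"])
```

The point of the sketch is the shape of the exchange: the dataset location and metadata go in, and the AIP UUID and location come back, tied to the dataset they describe.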

Interactive Preservation Workflow

The conceptual workflow below shows how digital preservation can be used in an interactive way as part of the RDSS Alpha. Whilst the diagram and description show a Repository and Preservation System, in practice other components of the RDSS will come into play, for example databases and messaging systems that mediate the interactions between parts of the RDSS and other User Interfaces that provide Researchers and Research Managers with ways in which to interact with the RDSS. These extra components and user interfaces are omitted from the diagrams for the sake of clarity - our objective is to explain the overall workflow at a conceptual level rather than dive into too many details.

Note: this workflow assumes that the Research Manager at an institution has already developed a policy and preservation plan for their Institution's research data, i.e. they are familiar with Archivematica's preservation planning and pipeline configuration options. See the Digital Preservation Capabilities section for more details.

Research Dataset Upload and DOI pre-allocation.

The Researcher creates a Research Dataset Record in the RDSS Research Data Repository for their Research Dataset. [1]

The Researcher adds basic metadata (title, subject, date, author etc.) to the record. This is the minimum metadata needed to complete a repository record, e.g. DataCite fields. [2]

The Researcher uploads their Research Dataset to the RDSS. [3]

The Researcher links their uploaded Research Dataset to the Research Data Record in the Repository. [4]

The Researcher reserves a DOI for their Research Dataset, e.g. using DataCite. [5]

The Researcher includes the DOI for their Research Dataset in their draft paper.

The Researcher requests that the dataset record is made public.

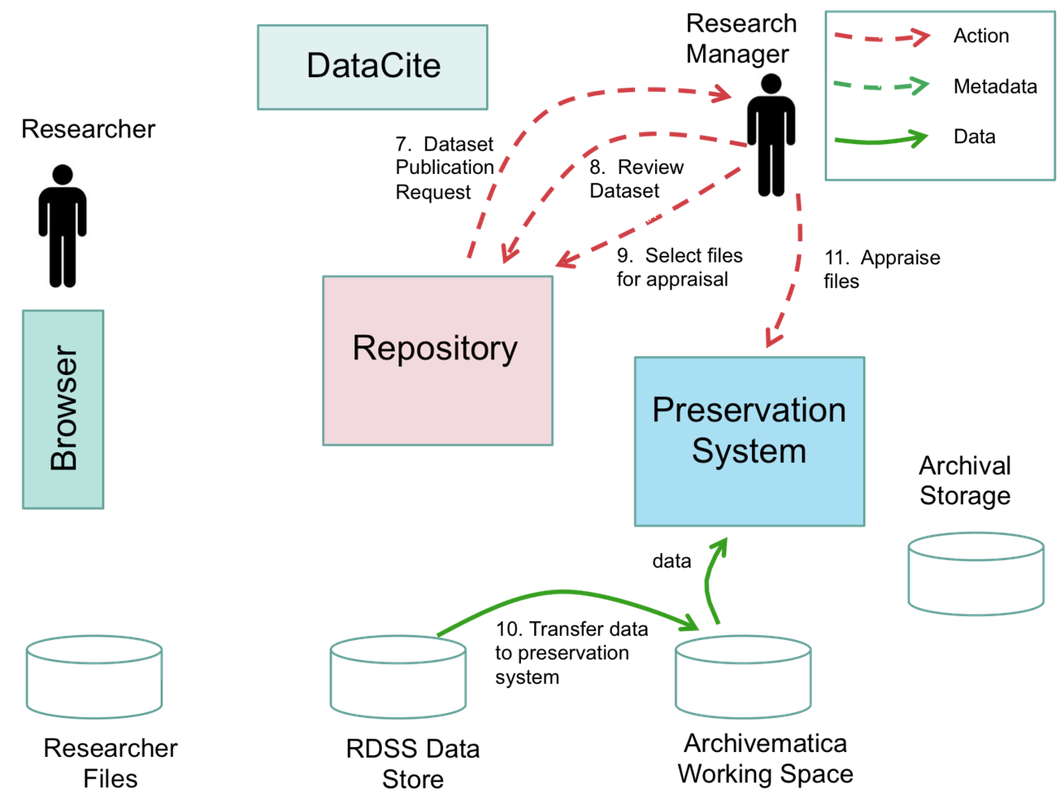

Research Dataset QC

The Researcher's request for dataset publication triggers a 'review-approve' QC process that is undertaken by the Research Manager.

The Research Manager is notified by the RDSS that there is a candidate public dataset from the Researcher that needs review and approval. [7]

The Research Manager checks the files in the dataset to make sure they are well documented and in widely used or well understood formats. [8]

The Research Manager selects some files that they don't recognise or understand. [9]

The Research Manager requests that these are sent to the Preservation System (Archivematica) for appraisal.

The RDSS passes the location of the selected files to Archivematica.

The files are copied to Archivematica working storage so they can be selected and used in Archivematica. [10]

The Research Manager runs Archivematica to analyse the files and puts them in the Archivematica transfer backlog.

The Research Manager uses Archivematica to appraise the files, e.g. by looking at reports on file formats. [11]

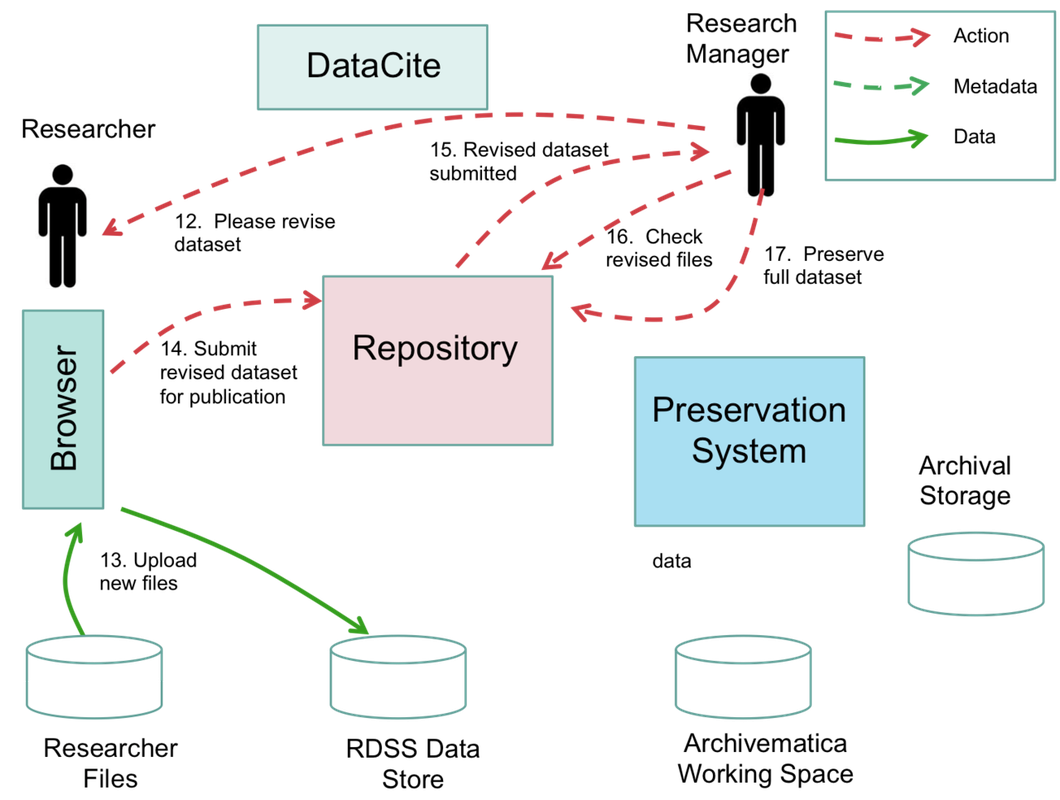
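The appraisal step in the QC stage depends on file format identification. A minimal sketch of signature-based identification follows, assuming a tiny hand-written table of well-known file signatures ('magic numbers'). Real identification tools rely on much larger signature registries, so this table is only an illustrative subset. The function names and the sample files are hypothetical.

```python
from typing import Optional

# A tiny, illustrative subset of well-known file signatures.
SIGNATURES = {
    b"%PDF-": "PDF",
    b"\x89PNG\r\n\x1a\n": "PNG",
    b"PK\x03\x04": "ZIP container",
    b"\xff\xd8\xff": "JPEG",
}


def identify(header: bytes) -> Optional[str]:
    """Return a format name if the leading bytes match a known signature."""
    for magic, name in SIGNATURES.items():
        if header.startswith(magic):
            return name
    return None


def appraise(files: dict) -> list:
    """List the names of files whose format could not be identified."""
    return [name for name, header in files.items() if identify(header) is None]


# File names mapped to their first few bytes (made-up example data).
files = {
    "report.pdf": b"%PDF-1.4\n",
    "figure.png": b"\x89PNG\r\n\x1a\n\x00\x00",
    "instrument.dat": b"\x00\x01RAW",
}
print(appraise(files))
```

Files that come back unidentified are the ones the Research Manager would raise with the Researcher in the revision stage.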

Research Dataset Revision

The Research Manager tells the Researcher that some of the files aren't recognised and the dataset needs to be revised before publication will be allowed. [12]

The Researcher uploads new versions of some of their files, e.g. after converting them into open-standard, open-specification, well-understood or widely-used formats.

The Researcher uploads extra documentation for some of their files that don't have an open format, e.g. describing what software to use to open the files. [13]

The Researcher resubmits the Research Dataset as a new candidate for publication. [14]

The Research Manager is notified by the RDSS of the revised candidate dataset from the Researcher. [15]

The Research Manager does an initial inspection to see if the Researcher has addressed the dataset issues. [16]

The Research Manager either accepts the changes or undergoes further 'negotiation' with the Researcher (which may or may not resolve all the problems).

The Research Manager decides that the dataset is now ready for preservation.

The Research Manager requests that the whole dataset is sent to Archivematica including the new/updated files and the extra documentation. [17]

Research Dataset Preservation

The Research Dataset is transferred to Archivematica working storage. [18]

The metadata in the Research Data Record is also passed to Archivematica, including the DOI of the Research Dataset. [19]

The Research Manager uses Archivematica to generate an Archival Information Package (AIP). More details of the workflow supported inside Archivematica can be found in the Digital Preservation Capabilities section. [20]

The AIP is given a UUID.

The AIP is transferred by Archivematica to archival storage for long-term retention. [21]

The RDSS is informed by Archivematica that the AIP is safely archived and is given the AIP UUID/location and a reference to say which Research Dataset the AIP contains. [22]

The RDSS is passed technical metadata generated by Archivematica about the files in the Research Dataset, e.g. the file formats. [23]

The RDSS is passed a description of the preservation actions done to create the AIP, e.g. normalisation. [23]

The RDSS updates the Research Data Record in the Repository with the AIP UUID and location, the technical metadata and the preservation metadata.

The RDSS notifies the Research Manager that processing of the Research Dataset is complete. [24]

The Research Manager approves the dataset for publication. [25]

The Repository makes the Research Dataset public.

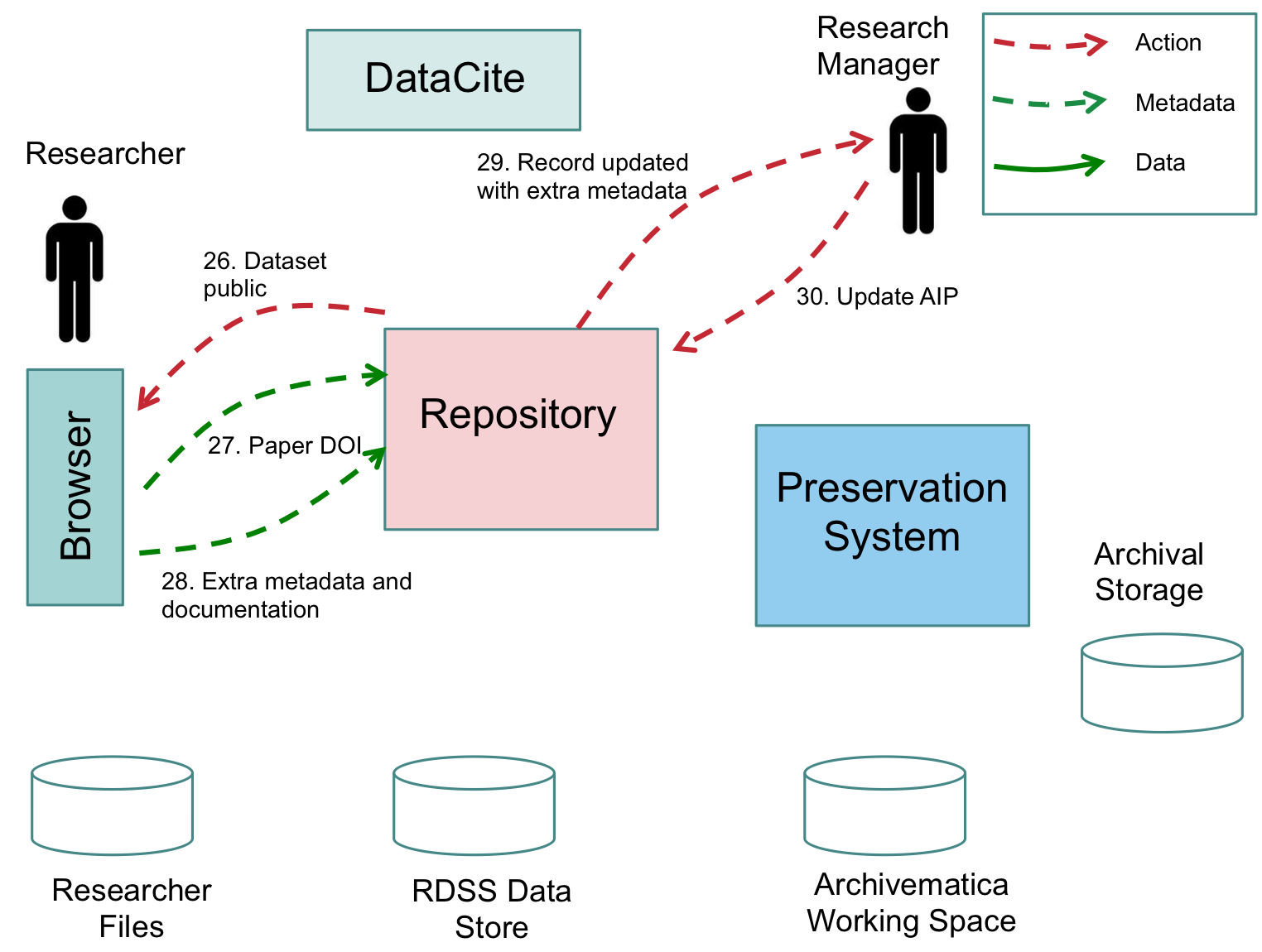

Research Paper Publication

The Researcher is informed by the Repository that their Research Dataset has been approved and has been published. [26]

The Researcher completes the paper publication process and their paper is published in a Journal.

The Journal publishing the paper gives the paper a DOI.

(Note: the above is oversimplified and there may be more complex workflows depending on whether the paper is Open Access under green or gold APC models.)

Research Dataset Metadata Update

The Researcher updates the Research Dataset Record to add the DOI of the published paper as extra metadata. [27]

The Researcher adds other metadata to the record, e.g. further documentation of how to use the dataset and further papers that describe the research that created/used the dataset. [28]

The Research Manager is notified that the Research Dataset Record has been updated with new metadata.

The Research Manager uses the Repository to request that the new metadata is added to the AIP.

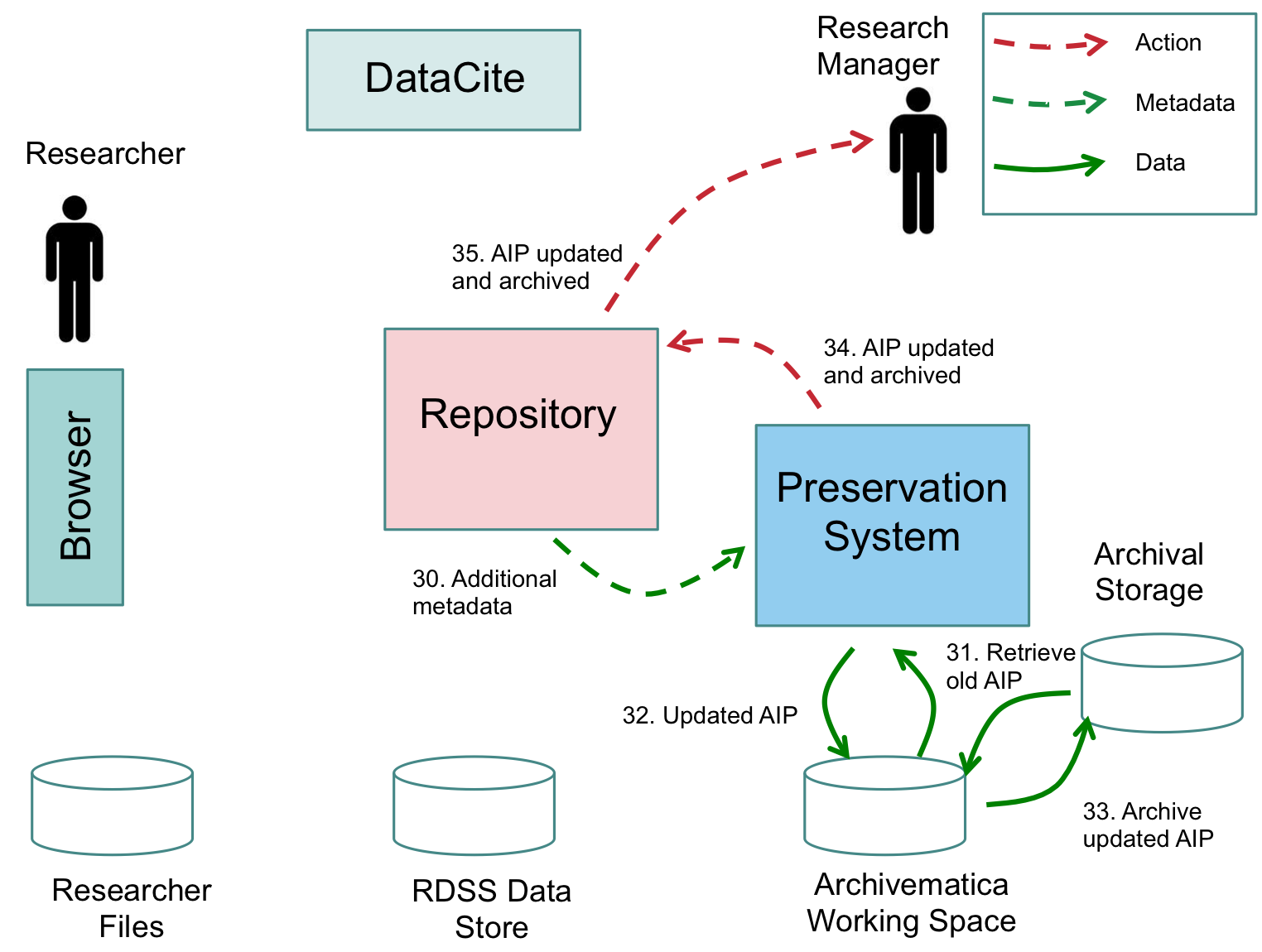

AIP Update

The RDSS sends the new metadata to Archivematica along with the UUID of the AIP containing the corresponding Research Data. [30]

The AIP is retrieved from archival storage and is re-ingested so that the new metadata can be added. [31]

The AIP is updated to add the new metadata. [32]

The updated AIP is transferred to archival storage. [33]

The RDSS is notified by Archivematica that the AIP has been updated and has been successfully stored back to archival storage. [34]

The RDSS updates the Research Dataset Record with details of the updated AIP.

The Research Manager is notified that the AIP has been updated. [35]

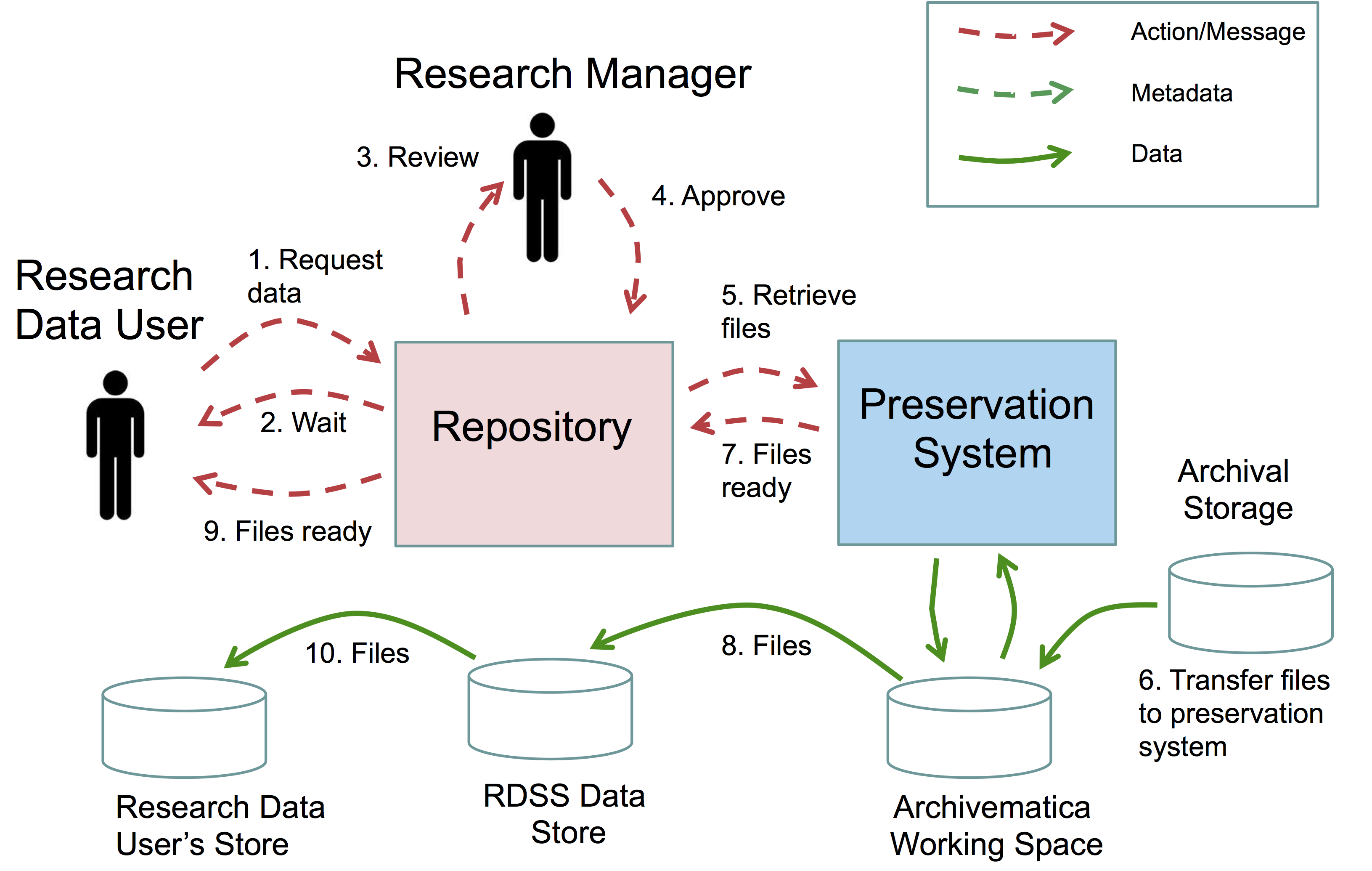

Dataset retrieval workflow

The conceptual workflow below shows how a Research Data User can access and use Research Data that is held within an archived AIP. Whilst the diagram and description show a Repository and Preservation System, in practice other components of the RDSS will come into play, for example databases and messaging systems that mediate the interactions between parts of the RDSS and other User Interfaces that provide Researchers and Research Managers with ways in which to interact with the RDSS. These extra components and user interfaces are omitted from the diagram for the sake of clarity - our objective is to explain the overall workflow at a conceptual level rather than dive into too many details.

In many cases, the Research Data User will want to access files in a Research Dataset where that dataset is stored on the RDSS data store and can be served up immediately by the Repository. This doesn't require any involvement of the Preservation System and retrieval from AIP archival storage is not needed. This scenario is not in the scope of our MVP and we expect it will be supported by the Repositories provided in Lot 1.

However, in some cases the AIPs generated by Archivematica might be the only version of the Research Dataset, for example because the dataset is large or infrequently used and hence it doesn't make sense to retain the data on 'live' RDSS storage for cost reasons. If files need to be accessed from these AIPs then there needs to be a workflow to support their retrieval from archival storage so that they can subsequently be downloaded by the Research Data User. The workflow below describes this scenario.

Suppose that a Research Data User has discovered some data that they would like to use. For example, they may have followed a dataset DOI in a publication they have read, they may have found the dataset listed in a Researcher's ORCID profile, they may have searched the Jisc Research Data Discovery Service or they may have searched the Jisc RDSS Repository. Suppose that data is held in one or more AIPs that are in archival storage and it will take time to restore the data from archive so it is readily accessible to the user.

The Research Data User looks at the Research Data Record in the Repository (or DOI landing page) and requests a copy of some of the files in the Research Dataset. [1]

The Repository does not have a local copy of the data because it is being held as AIPs in archival storage.

The Repository tells the Research Data User that the data is archived and will need to be retrieved from the archive before they can access it. [2]

The Repository notifies the Research Manager that the Research Data User would like to access the data. [3]

The Research Manager reviews the details of the request, decides that the request is valid, and approves that the Research Data User should be given access to the data. [4]

The Repository requests that the Preservation System should restore the files from archival storage. [5]

The Preservation System retrieves the files to its local storage. [6]

The Preservation System notifies the Repository that the files are ready. [7]

The Repository copies the files from the Preservation System to the RDSS data store into an area where they are accessible to the Research Data User, e.g. AWS S3. [8]

The Research Data User is notified that the files are ready for access. [9]

The Research Data User downloads the files. [10]

Note that the workflow above is inefficient for datasets containing large files or large numbers of files. Changes/improvements could include direct retrieval of files by the Repository from archival storage. However, the workflow above does have some notable features:

- The Repository does not mediate data downloads directly, rather it coordinates the process of getting data from archival storage to a download area that the user can access. This is important when large datasets are involved because many Repository solutions don't scale well for big datasets. This is not an issue in the MVP but will be for the Beta.

- The data is retrieved via the Preservation System. This allows the data to be transformed if needed by Archivematica before delivery to the user. For example, it would allow a DIP to be created from an archived AIP. This aligns with the way that some of the pilots expect to work, i.e. generating DIPs on demand. It also allows data to be filtered before delivery to a user, e.g. because some data may have rights issues or could be embargoed. This is not an issue for the MVP because it will only target datasets that have already been cleared for Open Access.

- Archivematica mediates access to the archival storage system, which is consistent with Archivematica's current approach of having a Storage Service that abstracts storage targets and is also consistent with the OAIS model.
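The retrieval steps described in this section follow a fixed order: request, approval, restore, notification and download. One way to picture that ordering is as a simple state machine, sketched below. The state names and the `advance` function are hypothetical and are not part of the RDSS or of Archivematica. Rejection and error paths are left out.

```python
from enum import Enum


class State(Enum):
    REQUESTED = "requested"    # the user asks for archived files
    APPROVED = "approved"      # the Research Manager approves the request
    RESTORING = "restoring"    # files are being restored from archival storage
    READY = "ready"            # files are copied to the download area
    DOWNLOADED = "downloaded"  # the user has downloaded the files


# Each state may only move to the next step of the workflow.
NEXT = {
    State.REQUESTED: State.APPROVED,
    State.APPROVED: State.RESTORING,
    State.RESTORING: State.READY,
    State.READY: State.DOWNLOADED,
}


def advance(state: State) -> State:
    """Move a retrieval request to its next state, or fail if it is finished."""
    if state not in NEXT:
        raise ValueError(f"no further step after {state.value}")
    return NEXT[state]


state = State.REQUESTED
history = [state]
while state in NEXT:
    state = advance(state)
    history.append(state)
print(" -> ".join(s.value for s in history))
```

Modelling the request this way makes it explicit that the Repository coordinates the process, while the data itself moves through the Preservation System.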

Digital Preservation Capabilities

The RDSS in Alpha phase will use version 1.6 of Archivematica, which provides a wide range of digital preservation capabilities. The initial pilot is a great opportunity for institutions to develop their understanding of preservation capabilities, best practice and opportunities for improvement or integration with research data management processes.

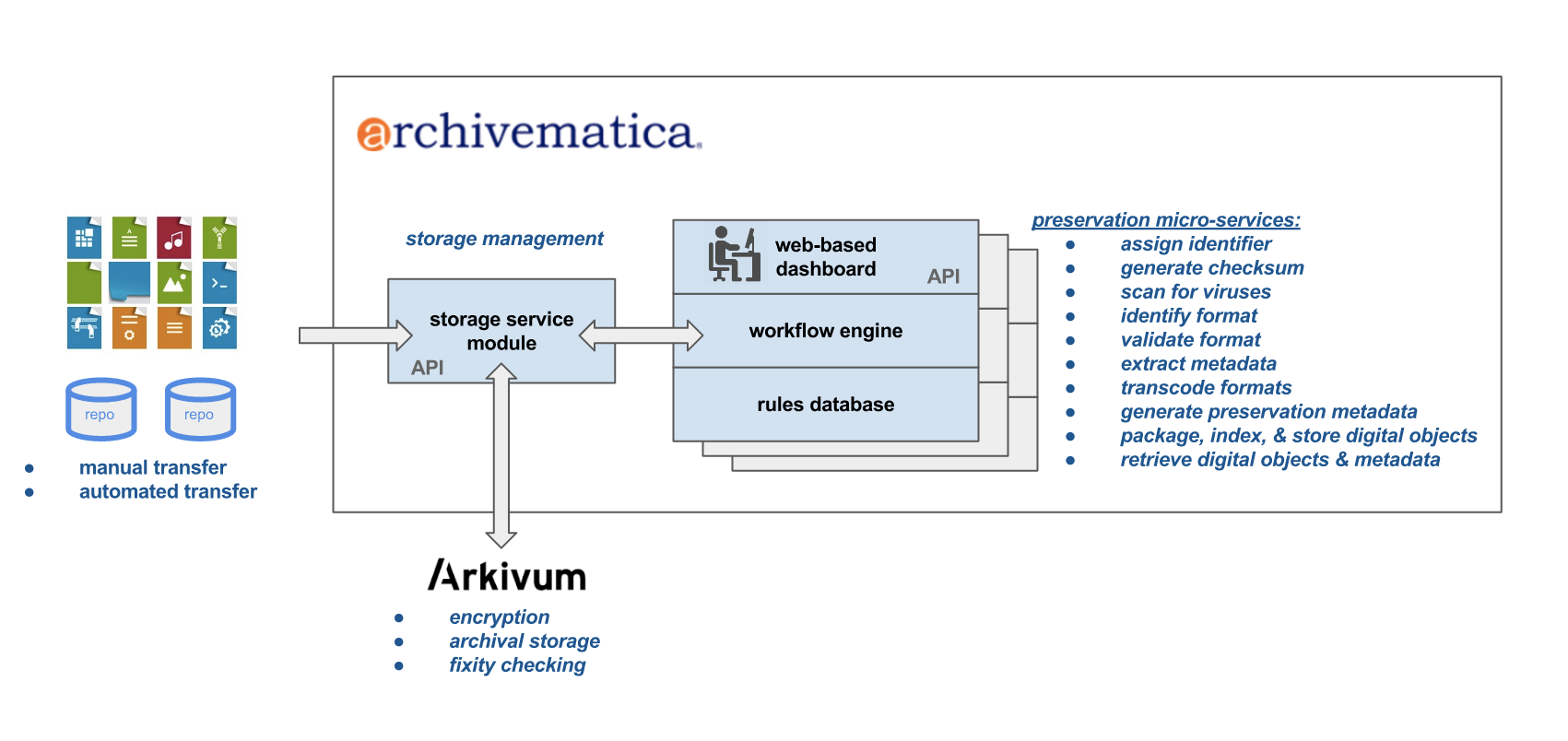

Current Archivematica-Arkivum functionality/integration

The MVP approach is to do a first-stage integration with the wider RDSS platform. The objective is not to try to deeply embed digital preservation into the RDSS until the HEI pilots have had a chance to experiment with Archivematica and its preservation capabilities.

The breadth of configuration options and tools in Archivematica may also mean that more can be attempted inside Archivematica than is supported in the wider preservation platform. For example, descriptive metadata can be added from within Archivematica and it can be used to create Dissemination Information Packages (DIPs). However, whilst DIP creation is possible in the MVP, the upload of these DIPs to external publishing systems, e.g. repositories, won't be supported. The routing of DIPs to other parts of the RDSS will be considered in the Beta.

We encourage pilot participants to 'learn by doing' and to try out Archivematica, which will be enormously useful in helping Jisc, Arkivum and Artefactual to understand how to further develop the RDSS integration so it can make the best possible use of the preservation capabilities available. Additionally, the MVP and pilot projects will identify and prioritise new software development and R&D within the preservation platform that can improve the platforms and tools (e.g. format registries, DROID signatures).

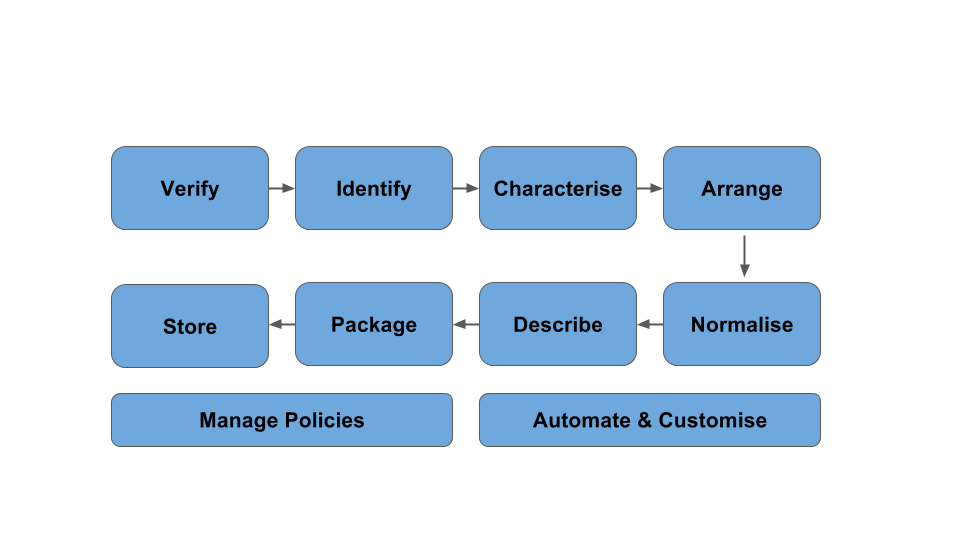

The diagram above summarises the key capabilities of the preservation platform:

- Verify that datasets are complete and secure (e.g. through fixity checks, virus scanning and quarantine processes)

- Identify the types of digital objects in a dataset (e.g. file format identification and validation of conformance to standards)

- Characterise the digital objects being preserved (e.g. extracting additional metadata using tools that interrogate the objects themselves) so that they can be better understood or rendered later

- Arrange the datasets and associated metadata to improve coherence, navigation and usability

- Normalise or migrate digital objects to open and widely supported standards to reduce the risk of format obsolescence

- Describe the datasets with additional metadata (in open and well-used standards) so that future users can understand what has been preserved, how it can be used and that they can trust it (authenticity and provenance)

- Package the datasets and metadata into self-describing packages that can minimise any dependencies or reliance on repositories we use today ("AIPs"), or simplify the contents provided for access and use ("DIPs")

- Store multiple copies of datasets in multiple locations and different media (disc, tape) to ensure safety and security, while helping to manage the cost of long term storage

The process above can be repeated if necessary at a later date, i.e. it can be used in a cyclical way and not just as a one off. For example, AIPs created in the past can be re-ingested and processed again in order to:

- add new metadata

- repeat file format identification and normalisation because new rules or tools become available

- create new DIPs

The core preservation capabilities are supported by underlying administration and management features:

- Manage policies to codify best practices or institutional requirements and ensure consistent application of preservation rules

- Automate and customise workflow using automation tools (for example, specifying preservation steps that should be skipped or done in a different sequence)
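Of the capabilities listed in this section, fixity checking is the simplest to illustrate. The sketch below records a SHA-256 checksum for a file and later verifies the file against it, using only the Python standard library. It is a stand-alone illustration and does not show how Archivematica itself performs fixity checks. The function names, file name and file contents are made up.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_fixity(path: Path, expected: str) -> bool:
    """Return True if the file still matches the recorded checksum."""
    return sha256_of(path) == expected


with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "observations.csv"
    data_file.write_bytes(b"time,temperature\n0,-3.2\n")

    recorded = sha256_of(data_file)              # checksum recorded at ingest
    intact = verify_fixity(data_file, recorded)  # later check, file unchanged

    data_file.write_bytes(b"time,temperature\n0,-3.3\n")  # simulate corruption
    corrupted = not verify_fixity(data_file, recorded)

print(intact, corrupted)
```

Because the checksum changes whenever even one byte of the file changes, a mismatch is a reliable signal that a stored copy needs to be repaired from another copy.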

Beyond the MVP

The MVP for the Alpha has two exemplar workflows of what's possible using the current capabilities of Archivematica and a simple integration with the RDSS.

In the Beta stage of the project we'll have lots of opportunity to revise and extend these workflows. This page lists some of the areas we've already identified that could be supported in the next round of development.

We are also hoping for lots of feedback from the HEIs both on the MVP workflows as documented on these pages and also from hands-on experience with Archivematica in the Alpha stage of the project - both of which should help us refine/extend the list of 'what to do next'.

Some areas to consider in the Beta include:

- Archivematica could be used to generate AIPs as part of a dataset QC process in advance of records being created in the Repository or data being made public. This is the model described in the third report of the Filling the Digital Preservation Gap project.

- Archivematica could be used to generate DIPs that are then automatically pushed to a Repository for dataset access. The DIP could be generated in advance or it could be generated on demand, for example

(i) in response to an access request,

(ii) because new dissemination file formats are available, or

(iii) because the underlying AIP has been updated.

The Alpha already supports DIP generation because this is out-of-the-box functionality in Archivematica. In the Beta we could explore

(a) whether research data DIPs need any additional special features and

(b) where the DIPs should go, e.g. passed to a repository or data publication platform.

- In the Beta, we are expecting HEIs to want to do digital preservation on data that isn't cleared for public access, e.g. because it's embargoed, contains personal data, or has copyright or other IPR issues. In some cases the data could be sensitive and access may need to be tightly controlled, even within an institution. Therefore, we'll need to look at how personal/sensitive/confidential data can be supported in the preservation platform, e.g. through dedicated pipelines, control over which users can see which datasets, or policies that determine where AIPs get stored.

- If Archivematica doesn't recognise the format of one or more files then the Research Manager could be invited to make a submission to The National Archives (TNA) for new signatures to be added to PRONOM.

- If new file format signatures or FPR tools become available then existing AIPs could be re-processed to have new signatures/rules/tools applied.

- The Research Manager may want to use more than one file format identification tool, e.g. based on a first pass of file format identification they might select one or more tools to apply in a second pass. There are lots of format identification tools (file, tika, droid, fits, siegfried etc.) and they don't always produce the same results.

- Different types of research data or different institutions will warrant different configurations for Archivematica pipelines. Recognising this, there are several workflows that could be supported:

- Templated configurations for Archivematica pipelines could be authored and shared between institutions.

- An institution might want to have multiple pipelines with rules/routes that cause incoming research datasets to be directed to the appropriate pipeline for processing.

- If a Research Manager is appraising files within Archivematica then this might be the easiest place for them to tag/annotate files with extra metadata. There would need to be a workflow that allows this metadata to be pushed to the Repository record for the dataset.

- By the time an AIP has been created, archived and accessed, there are potentially several copies of the data hanging around. These include

(a) the Researcher's original copy in their environment, i.e. pre-upload to the RDSS,

(b) the copy as uploaded to the RDSS and held in RDSS storage prior to preservation, and

(c) copies of the AIP or files within it that have been restored to RDSS storage in order to service data access requests.

There should be some form of workflow to allow these copies to be deleted, but only when safe to do so, e.g. the Researcher should not delete their original data until the RDSS confirms that it has been successfully added to an AIP and is in safe storage.

- Data integrity is important in the research world, by which we mean that a dataset remains complete and correct in terms of its constituent data files, metadata, organisation etc. There should be some form of workflow to help with end-to-end data integrity, e.g. so it can be demonstrated that a set of files and metadata go all the way from the Researcher's local environment, up to the RDSS, through preservation and into an AIP with no loss of content at any step. There is basic support for some of this already in Archivematica, e.g. submitting data in BagIt bags, but this probably needs to be extended.

- Metadata entered by the Researcher into the Repository for the MVP workflow could be as little as the mandatory fields needed to create a record in the Repository, e.g. DataCite or RIOXX. However, there may be lots more discipline-specific metadata available that is either optional in the Repository schema or not supported at all. In the Beta we could explore how to handle this, e.g. how does it get uploaded by the Researcher and passed through to Archivematica for inclusion in the preservation (AIP) or access (DIP) packages?

- Unrecognised formats. Unrecognised formats [1][2][3][4] are common in the research data domain and within data repositories more generally. In the Beta we could explore how information on unrecognised formats (e.g. formats not in PRONOM) could be captured and shared between institutions.

- Preservation planning. Archivematica supports a Format Policy Registry (FPR) that says how to handle various file types, e.g. rules for characterisation, validation and normalisation. The Beta could explore how to establish a shared/community FPR between institutions and, more widely, how to better support the development and implementation of preservation policies and plans.

- Some institutions may want a 'pre-transfer' area where they can run tools to understand/appraise data before deciding what is transferred to Archivematica for preservation. Do we need to support this model in the Beta? How does this type of exploratory/appraisal activity relate to the appraisal work that can be done inside Archivematica, e.g. using the new 'appraisal tab' in Archivematica 1.6?
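
As an illustration of the end-to-end data integrity item in the list above, the following is a minimal sketch of how two stages of a dataset's journey (for example the Researcher's local copy and the copy held in RDSS storage) might be compared using checksums. It is not part of Archivematica or the RDSS: the function names build_manifest and compare_manifests are hypothetical, and SHA-256 is an assumed choice of checksum algorithm.

```python
import hashlib
from pathlib import Path


def build_manifest(root):
    """Map each file path (relative to root) to its SHA-256 digest.

    Hypothetical helper for illustration only.
    """
    root = Path(root)
    manifest = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            digest = hashlib.sha256()
            with path.open("rb") as handle:
                # Read in 1 MiB chunks so large data files are not loaded whole.
                for chunk in iter(lambda: handle.read(1024 * 1024), b""):
                    digest.update(chunk)
            manifest[path.relative_to(root).as_posix()] = digest.hexdigest()
    return manifest


def compare_manifests(before, after):
    """Report files that were lost, added or altered between two stages."""
    shared = set(before) & set(after)
    return {
        "missing": sorted(set(before) - set(after)),
        "unexpected": sorted(set(after) - set(before)),
        "changed": sorted(name for name in shared if before[name] != after[name]),
    }
```

A manifest could be built at each step (local environment, RDSS storage, extracted AIP) and each consecutive pair compared. Three empty lists in the report would suggest that no content was lost or altered between those two steps. This is similar in spirit to the checksum manifests carried in BagIt bags.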

[1] http://digital-archiving.blogspot.co.uk/2016/05/research-data-what-does-it-really-look.html

[2] http://blog.kbresearch.nl/2015/04/29/top-50-file-formats-in-the-kb-e-depot/

[3] http://www.slideshare.net/repofringe/repo-fringe-v0-01-gollins

[4] https://speakerd.s3.amazonaws.com/presentations/a2fd5875bd0346a4be56f14d7900f015/dpc-searching-for-obsolescence.pdf
